Non-linear dimensionality reduction on extracellular waveforms reveals cell type diversity in premotor cortex

Abstract

Cortical circuits are thought to contain a large number of cell types that coordinate to produce behavior. Current in vivo methods rely on clustering of specified features of extracellular waveforms to identify putative cell types, but these capture only a small amount of variation. Here, we develop a new method (WaveMAP) that combines non-linear dimensionality reduction with graph clustering to identify putative cell types. We apply WaveMAP to extracellular waveforms recorded from dorsal premotor cortex of macaque monkeys performing a decision-making task. Using WaveMAP, we robustly establish eight waveform clusters and show that these clusters recapitulate previously identified narrow- and broad-spiking types while revealing previously unknown diversity within these subtypes. The eight clusters exhibited distinct laminar distributions, characteristic firing rate patterns, and decision-related dynamics. Such insights were weaker when using feature-based approaches. WaveMAP therefore provides a more nuanced understanding of the dynamics of cell types in cortical circuits.

Introduction

The processes involved in decision-making, such as deliberation on sensory evidence and the preparation and execution of motor actions, are thought to emerge from the coordinated dynamics within and between cortical layers (Chandrasekaran et al., 2017; Finn et al., 2019), cell types (Pinto and Dan, 2015; Estebanez et al., 2017; Lui et al., 2021; Kvitsiani et al., 2013), and brain areas (Gold and Shadlen, 2007; Cisek, 2012). A large body of research has described differences in decision-related dynamics across brain areas (Ding and Gold, 2012; Thura and Cisek, 2014; Roitman and Shadlen, 2002; Hanks et al., 2015) and a smaller set of studies has provided insight into layer-dependent dynamics during decision-making (Chandrasekaran et al., 2017; Finn et al., 2019; Chandrasekaran et al., 2019; Bastos et al., 2018). However, we currently do not understand how decision-related dynamics emerge across putative cell types. Here, we address this open question by developing a new method, WaveMAP, that combines non-linear dimensionality reduction and graph-based clustering. We apply WaveMAP to extracellular waveforms to identify putative cell classes and examine their physiological, functional, and laminar distribution properties.

In mice, and to some extent in rats, transgenic tools allow the in vivo detection of particular cell types (Pinto and Dan, 2015; Lui et al., 2021), whereas in vivo studies in primates are largely restricted to using features of the extracellular action potential (EAP) such as trough to peak duration, spike width, and cell firing rate (FR). Early in vivo monkey work (Mountcastle et al., 1969) introduced the importance of EAP features, such as spike duration and action potential (AP) width, in identifying cell types. These experiments introduced the concept of broad- and narrow-spiking neurons. Later experiments in the guinea pig (McCormick et al., 1985), cat (Azouz et al., 1997), and the rat (Simons, 1978; Barthó et al., 2004) then helped establish the idea that these broad- and narrow-spiking extracellular waveform shapes mostly corresponded to excitatory and inhibitory cells, respectively. These results have been used as the basis for identifying cell types in primate recordings (Johnston et al., 2009; Merchant et al., 2008; Merchant et al., 2012). This method of identifying cell types in mammalian cortex in vivo is widely used in neuroscience but it is insufficient to capture the known structural and transcriptomic diversity of cell types in the monkey and the mouse (Hodge et al., 2019; Krienen et al., 2020). Furthermore, recent observations in the monkey defy this simple classification of broad- and narrow-spiking cells as corresponding to excitatory and inhibitory cells, respectively. Three such examples in the primate that have resisted this principle are narrow-spiking pyramidal tract neurons in deep layers of M1 (Betz cells, Vigneswaran et al., 2011; Soares et al., 2017), narrow and broad spike widths among excitatory pyramidal tract neurons of premotor cortex (Lemon et al., 2021), and narrow-spiking excitatory cells in layer III of V1, V2, and MT (Constantinople et al., 2009; Amatrudo et al., 2012; Onorato et al., 2020; Kelly et al., 2019).

To capture a more representative diversity of cell types in vivo, more recent studies have incorporated additional features of EAPs (beyond AP width) such as trough to peak duration (Ardid et al., 2015), repolarization time (Trainito et al., 2019; Banaie Boroujeni et al., 2021), and triphasic waveform shape (Barry, 2015; Robbins et al., 2013). Although these user-specified methods are amenable to human intuition, they are insufficient to distinguish between previously identified cell types (Krimer et al., 2005; Vigneswaran et al., 2011; Merchant et al., 2012). It is also unclear how to choose these user-specified features in a principled manner (i.e. one set that maximizes explanatory power) as they are often highly correlated with one another. As a result, different studies choose different sets of specified features, each yielding different inferred cell classes (Trainito et al., 2019; Viskontas et al., 2007; Katai et al., 2010; Sun et al., 2021). Thus, it is difficult to compare putative cell types across the literature. Some studies even conclude that there is no single set of specified features that is a reliable differentiator of type (Weir et al., 2014).

These issues led us to investigate techniques that do not require feature specification but are designed to find patterns in complex datasets through non-linear dimensionality reduction. Such methods have seen usage in diverse neuroscientific contexts such as single-cell transcriptomics (Tasic et al., 2018; Becht et al., 2019), in analyzing models of biological neural networks (Maheswaranathan et al., 2019; Kleinman et al., 2019), the identification of behavior (Bala et al., 2020; Hsu and Yttri, 2020; Dolensek et al., 2020), and in electrophysiology (Jia et al., 2019; Gouwens et al., 2020; Klempíř et al., 2020; Markanday et al., 2020; Dimitriadis et al., 2018).

Here, in a novel technique that we term WaveMAP, we combine a non-linear dimensionality reduction method (Uniform Manifold Approximation and Projection [UMAP]; McInnes et al., 2018) with graph community detection (Louvain community detection; Blondel et al., 2008), which we colloquially call ‘clustering’, to understand the physiological properties, decision-related dynamics, and laminar distribution of candidate cell types during decision-making. We applied WaveMAP to extracellular waveforms collected from neurons in macaque dorsal premotor cortex (PMd) in a decision-making task using laminar multi-channel probes (16 electrode ‘U-probes’). We found that WaveMAP significantly outperformed current approaches without the need for user specification of waveform features like trough to peak duration. This data-driven approach exposed more diversity in extracellular waveform shape than any constructed spike features in isolation or in combination. Using interpretable machine learning, we also show that WaveMAP picks up on nuanced and meaningful biological variability in waveform shape.

WaveMAP revealed three broad-spiking and five narrow-spiking waveform types that differed significantly in shape, physiological, functional, and laminar distribution properties. Although most narrow-spiking cells had the high maximum firing rates typically associated with inhibitory neurons, some had firing rates similar to broad-spiking neurons which are typically considered to be excitatory. The time at which choice selectivity (‘discrimination time’) emerged for many narrow-spiking cell classes was earlier than broad-spiking neuron classes—except for the narrow-spiking cells that had broad-spiking like maximum firing rates. Finally, many clusters had distinct laminar distributions that appear layer-dependent in a manner matching certain anatomical cell types. This clustering explains variability in discrimination time over and above previously reported laminar differences (Chandrasekaran et al., 2017). Together, this constellation of results reveals previously undocumented relationships between waveform shape, physiological, functional, and laminar distribution properties that are missed by traditional approaches. Our results provide powerful new insights into how candidate cell classes can be better identified and how these types coordinate with specific timing, across layers, to shape decision-related dynamics.

Results

Task and behavior

Two male rhesus macaques (T and O) were trained to perform a red-green reaction time decision-making task (Figure 1A). The task was to discriminate the dominant color of a central static red-green checkerboard cue and to report their decision with an arm movement towards one of two targets (red or green) on the left or right (Figure 1A).

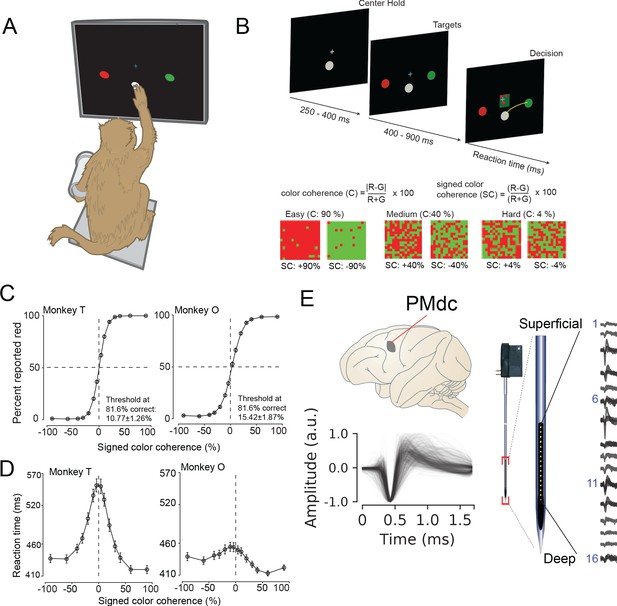

Recording locations, waveform shapes, techniques, task, and discrimination behavior.

(A) An illustration of the behavioral setup in the discrimination task. The monkey was seated with one arm free and one arm gently restrained in a plastic tube via a cloth sling. An infrared-reflecting (IR) bead was taped to the forefinger of the free hand and was used in tracking arm movements. This gave us a readout of the hand’s position and allowed us to mimic a touch screen. (B) A timeline of the decision-making task (top). At bottom is defined the parametrization of difficulty in the task in terms of color coherence and signed color coherence (SC). (C) Average discrimination performance and (D) Reaction time (RT) over sessions of the two monkeys as a function of the SC of the checkerboard cue. RT plotted here includes both correct and incorrect trials for each session and then averaged across sessions. Gray markers show measured data points along with 2 × S.E.M. estimated over sessions. For many data points in (C), the error bars lie within the marker. X-axes in both (C), (D) depict the SC in %. Y-axes depict the percent responded red in (C) and RT in (D). Also shown in the inset of (C) are discrimination thresholds (mean ± S.D. over sessions) estimated from a Weibull fit to the overall percent correct as a function of coherence. The discrimination threshold is the color coherence at which the monkey made 81.6% correct choices. Seventy-five sessions for monkey T (128,989 trials) and 66 sessions for monkey O (108,344 trials) went into the averages. (E) The recording location in caudal PMd (top); normalized and aligned isolated single-unit waveforms (n = 625, 1.6 ms each, bottom); and schematic of the 16-channel Plexon U-probe (right) used during the behavioral experiment.

The timeline of the task is as follows: a trial began when the monkey touched the center target and fixated on a cross above it. After a short randomized period, two targets, one red and one green, appeared on either side of the center target (see Figure 1B, top). The target configuration was randomized: sometimes the left target was red and the right target was green, and sometimes the reverse. After another short randomized target viewing period, a red-green checkerboard appeared in the center of the screen with a variable mixture of red and green squares.

We parameterized the variability of the checkerboard by its signed color coherence and color coherence. The signed color coherence (SC) provides an estimate of whether there are more red or green squares in the checkerboard. Positive SC indicates the presence of more red squares, whereas negative SC indicates more green squares. SC close to zero (positive or negative) indicates an almost even number of red or green squares (Figure 1B, bottom). The coherence (C) provides an estimate of the difficulty of a stimulus. Higher coherence indicates that there is more of one color than the other (an easy trial) whereas a lower coherence indicates that the two colors are more equal in number (a difficult trial).
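As a concrete illustration of these two difficulty measures, the short sketch below computes them from counts of red and green squares. The formula SC = 100 × (R − G)/(R + G), with C = |SC|, is an assumption that is consistent with the description above but is not stated in this section; the function names and the example counts are hypothetical.

```python
def signed_coherence(n_red, n_green):
    """Signed color coherence (SC) in percent; positive means more red squares.

    Assumed definition: SC = 100 * (R - G) / (R + G).
    """
    total = n_red + n_green
    if total <= 0:
        raise ValueError("checkerboard must contain at least one square")
    return 100.0 * (n_red - n_green) / total


def coherence(n_red, n_green):
    """Unsigned color coherence (C) in percent; higher means an easier trial."""
    return abs(signed_coherence(n_red, n_green))


# Hypothetical checkerboards: one mostly red, one mostly green.
print(signed_coherence(3, 1), coherence(3, 1))
print(signed_coherence(1, 3), coherence(1, 3))
```

Under this assumed definition, two checkerboards with mirrored color counts have opposite SC but the same C, which is why C rather than SC indexes difficulty.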

Our monkeys demonstrated the range of behaviors typically observed in decision-making tasks: monkeys made more errors and were slower for lower coherence checkerboards compared to higher coherence checkerboards (Figure 1C,D). We used coherence, choice, and reaction times (RT) to analyze the structure of decision-related neural activity.

Recordings and single neuron identification

While monkeys performed this task, we recorded single neurons from the caudal aspect of dorsal premotor cortex (PMd; Figure 1E, top) using single tungsten (FHC electrodes) or linear multi-contact electrodes (Plexon U-Probes, 625 neurons, 490 U-probe waveforms; Figure 1E, right) and a Cerebus Acquisition System (Blackrock Microsystems). In this study, we analyzed the average EAP waveforms of these neurons. All waveforms were analyzed after being filtered by a fourth-order high-pass Butterworth filter (250 Hz). A 1.6 ms snippet of the waveform was recorded for each spike and used in these analyses, a duration longer than many studies of waveform shape (Merchant et al., 2012).

We restricted our analysis to well-isolated single neurons identified through careful online isolation combined with offline spike sorting (see Methods section: Identification of single neurons during recordings). Extracellular waveforms were accepted as single neurons only if they had minimal ISI violations (1.5% < 1.5 ms). This combination of online vigilance and offline analysis gives us the confidence to label these waveforms as single neurons.
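The ISI criterion can be sketched as follows. This is a minimal illustration, not the authors' spike-sorting code: it reads ‘1.5% < 1.5 ms’ as ‘at most 1.5% of inter-spike intervals are shorter than 1.5 ms’, which is an assumption, and the function names and spike times are hypothetical.

```python
def isi_violation_fraction(spike_times_s, refractory_s=0.0015):
    """Fraction of inter-spike intervals (ISIs) shorter than `refractory_s` seconds."""
    times = sorted(spike_times_s)
    isis = [later - earlier for earlier, later in zip(times, times[1:])]
    if not isis:
        return 0.0  # fewer than two spikes: no intervals to violate
    violations = sum(1 for isi in isis if isi < refractory_s)
    return violations / len(isis)


def passes_isi_criterion(spike_times_s, max_fraction=0.015, refractory_s=0.0015):
    """True if the unit has at most `max_fraction` of ISIs below `refractory_s`."""
    return isi_violation_fraction(spike_times_s, refractory_s) <= max_fraction


# Hypothetical spike train (seconds) with one 1 ms interval among four ISIs.
train = [0.0, 0.001, 0.1, 0.2, 0.3]
print(isi_violation_fraction(train), passes_isi_criterion(train))
```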

We used previously reported approaches to align, average, and normalize spikes (Kaufman et al., 2013; Snyder et al., 2016). Spikes were aligned in time via their depolarization trough and normalized between −1 and 1. ‘Positive spiking’ units with large positive amplitude pre-hyperpolarization spikes were dropped from the analysis due to their association with dendrites and axons (Gold et al., 2009; Barry, 2015; Sun et al., 2021). Recordings were pooled across monkeys to increase statistical power for WaveMAP.
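The alignment and normalization steps might look like the sketch below for a single waveform. It assumes zero-padding when shifting and min-max scaling to the range −1 to 1; neither detail is specified in this section, and the function names and sample values are hypothetical.

```python
def align_to_trough(waveform, trough_index, fill=0.0):
    """Shift a waveform so that its minimum (the trough) lands at `trough_index`.

    Samples shifted out of range are dropped and vacated positions are
    filled with `fill` (an assumption made for this sketch).
    """
    shift = trough_index - waveform.index(min(waveform))
    aligned = [fill] * len(waveform)
    for i, value in enumerate(waveform):
        j = i + shift
        if 0 <= j < len(waveform):
            aligned[j] = value
    return aligned


def normalize_to_unit_range(waveform):
    """Min-max scale a waveform so its minimum is -1 and its maximum is 1."""
    lo, hi = min(waveform), max(waveform)
    if hi == lo:
        raise ValueError("cannot normalize a flat waveform")
    return [2.0 * (value - lo) / (hi - lo) - 1.0 for value in waveform]


# Hypothetical four-sample waveform with its trough at index 1.
raw = [0.0, -4.0, 2.0, 1.0]
print(align_to_trough(raw, trough_index=2))
print(normalize_to_unit_range(raw))
```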

Non-linear dimensionality reduction with graph clustering reveals robust low-dimensional structure in extracellular waveform shape

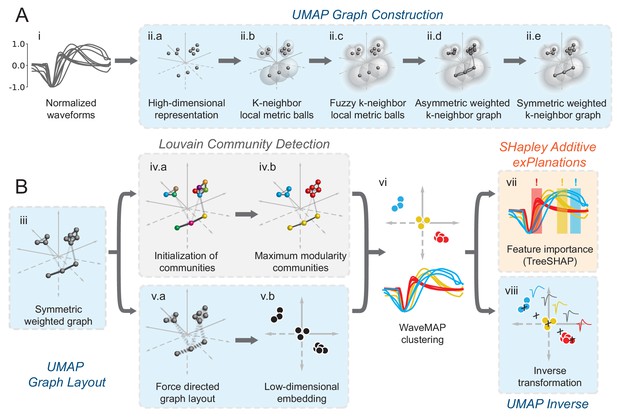

In WaveMAP (Figure 2), we use a three-step strategy for the analysis of extracellular waveforms. First, we passed the normalized and trough-aligned waveforms (Figure 2A–i) into UMAP to obtain a high-dimensional graph (Figure 2A–ii; McInnes et al., 2018). Second, we passed this graph (Figure 2B–iii) into Louvain clustering (Figure 2B–iv; Blondel et al., 2008) to delineate high-dimensional clusters. Third, we used UMAP to project the high-dimensional graph into two dimensions (Figure 2B–v). We colored the data points in this projected space according to their Louvain cluster membership found in step two to arrive at our final WaveMAP clusters (Figure 2B–vi). We also analyzed the WaveMAP clusters using interpretable machine learning (Figure 2B–vii) and an inverse transform of UMAP (Figure 2B–viii). A detailed explanation of the steps associated with WaveMAP is available in the Methods, and further mathematical details of WaveMAP are available in the Supplementary Information.

Schematic of WaveMAP.

(A) WaveMAP begins with UMAP which projects high-dimensional data into lower dimension while preserving local and global relationships (see Figure 2—figure supplement 1A for an intuitive diagram). Normalized average waveforms from single units (i) are passed to UMAP (McInnes et al., 2018) which begins with the construction of a high-dimensional graph (ii). In the high-dimensional space (ii.a), UMAP constructs a distance metric local to each data point (ii.b). The unit ball (ball with radius of one) of each local metric stretches to the 1st-nearest neighbor. Beyond this unit ball, local distances decrease (ii.c) according to an exponential distribution that is scaled by the local density. This local metric is used to construct a weighted graph with asymmetric edges (ii.d). The 1-nearest neighbors are connected by an edge of weight 1.0. For the next (k − 1) nearest neighbors, this weight then falls off according to the exponential local distance metric (in this diagram, some low-weight connections are omitted for clarity). These edges, a and b, are made symmetric according to a + b − a·b (ii.e). (B) The high-dimensional graph (iii) captures latent structure in the high-dimensional space. We can use this graph in Louvain community detection (Louvain, iv) (Blondel et al., 2008) to find clusters (see Figure 2—figure supplement 1B for an intuitive diagram). In Louvain, each data point is first initialized as belonging to its own ‘community’ (iv.a, analogous to a cluster in a metric space). Then, in an iterative procedure, each data point joins neighboring communities until a measure called ‘modularity’ is maximized (iv.b, see Supplemental Information for a definition of modularity). Next, data points in the same final community are aggregated to a single node and the process repeats until the maximal modularity is found on this newly aggregated graph. This process then keeps repeating until the maximal modularity graph is found and the final community memberships are passed back to the original data points. We can also use this graph to find a low-dimensional representation through a graph layout procedure (v). The graph layout proceeds by finding a ‘low energy’ configuration that balances attractive (shown as springs in v.a) and repulsive (not shown) forces between pairs of points as a function of edge weight or lack thereof. This procedure iteratively minimizes the cross-entropy between the low-dimensional and high-dimensional graphs (v.b). The communities found through Louvain are then combined with the graph layout procedure to arrive at a set of clusters in a low-dimensional embedded space (vi). These clusters (vi, top) can be used to classify the original waveforms (vi, bottom). To investigate ‘why’ these data points became clusters, each cluster is examined for locally (within-cluster) important features (SHAP; Lundberg and Lee, 2017; vii) and globally important trends (UMAP inverse transform, viii). Not shown is the classifier from which SHAP values are calculated. The diagrams for the graph construction and layout are based on UMAP documentation and the diagram for Louvain community detection is based on Blondel et al., 2008. Figure 2—figure supplement 1: An intuitive diagram of local and global distance preservation in UMAP and a schematic of the Louvain clustering process.
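The edge symmetrization step of the graph construction (ii.e) can be sketched in a few lines. In UMAP (McInnes et al., 2018), two directed edge weights a (from i to j) and b (from j to i) are combined into one undirected weight a + b − a·b. The dictionary representation, function name, and example weights below are hypothetical and chosen only for illustration.

```python
def symmetrize(directed):
    """Combine directed edge weights into undirected ones via a + b - a*b.

    `directed` maps (i, j) -> weight in [0, 1]; a missing reverse edge
    counts as weight 0. Keys of the result are (min(i, j), max(i, j)).
    """
    undirected = {}
    for (i, j), a in directed.items():
        key = (min(i, j), max(i, j))
        if key in undirected:
            continue  # this pair was already handled via the reverse edge
        b = directed.get((j, i), 0.0)
        undirected[key] = a + b - a * b
    return undirected


# Hypothetical k-nearest-neighbor weights: node 0's nearest neighbor is node 1
# (weight 1.0), but node 1 ranks node 0 lower (weight 0.5).
edges = {(0, 1): 1.0, (1, 0): 0.5, (1, 2): 0.4, (2, 3): 0.5, (3, 2): 0.5}
print(symmetrize(edges))
```

Because a + b − a·b is never smaller than either input, an edge that is strong in one direction stays strong after symmetrization.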

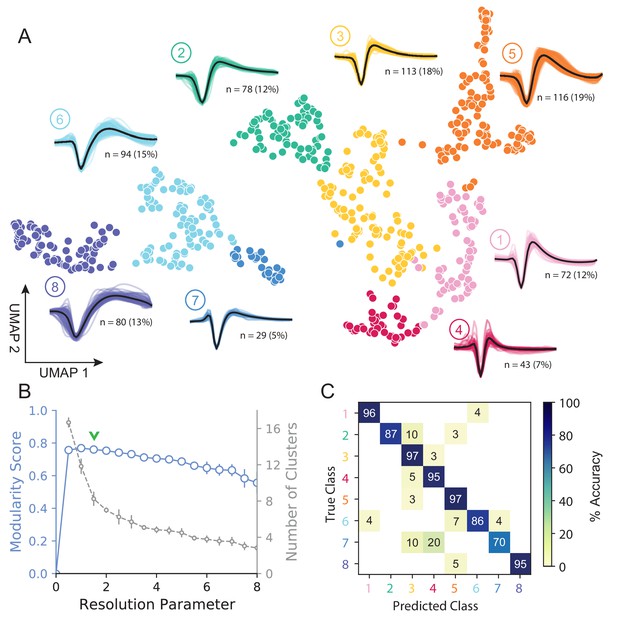

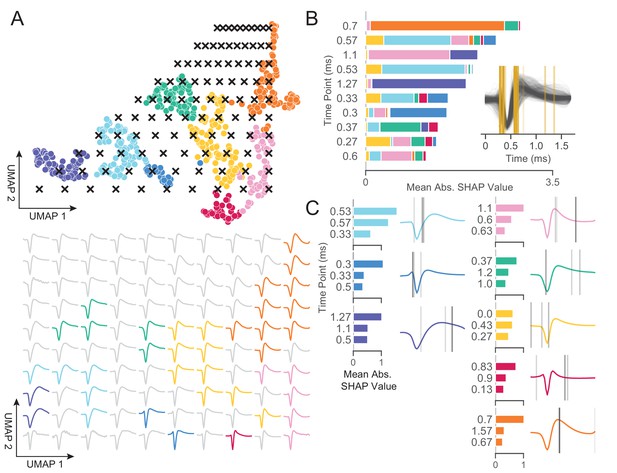

Figure 3A shows how WaveMAP provides a clear organization without the need for prior specification of important features. For expository reasons, and to link to prior literature (McCormick et al., 1985; Connors et al., 1982), we use the trough to peak duration to loosely subdivide these eight clusters into ‘narrow-spiking’ and ‘broad-spiking’ cluster sets. The broad-spiking clusters had a trough to peak duration of 0.74 ± 0.24 ms (mean ± S.D.) and the narrow-spiking clusters had a trough to peak duration of 0.36 ± 0.07 ms (mean ± S.D.). The narrow-spiking neurons are shown in warm colors (including green) at right in Figure 3A and the broad-spiking neurons are shown in cool colors at left in the same figure. The narrow-spiking set was composed of five clusters (①, ②, ③, ④, and ⑤) comprising ∼12%, ∼12%, ∼18%, ∼7%, and ∼19% (n = 72, 78, 113, 43, and 116) respectively, and collectively ∼68%, of total waveforms. The broad-spiking set was composed of three clusters (⑥, ⑦, and ⑧) comprising ∼13%, ∼5%, and ∼15% (n = 80, 29, and 94) respectively, and collectively ∼32%, of total waveforms.

UMAP and Louvain clustering reveal a robust diversity of averaged single-unit waveform shapes.

(A) Scatter plot of normalized EAP waveforms in UMAP space colored by Louvain cluster membership. Adjacent to each numbered cluster (① through ⑧) is shown all member waveforms and the average waveform shape (in black). Each waveform is 1.6 ms. Percentages do not add to 100% due to rounding. (B) Louvain clustering resolution parameter versus modularity score (in blue, axis at left) and the number of clusters (communities) found (in gray, axis at right). This was averaged over 25 runs for WaveMAP using 25 random samples and seeds of 80% of the full dataset at each resolution parameter from 0 to 8 in 0.5 unit increments (a subset of the data was used to obtain error bars). Each data point is the mean ± S.D. with many S.D. bars smaller than the marker size. Green chevrons indicate the resolution parameter of 1.5 chosen and its position along both curves. (C) The confusion matrix of a gradient boosted decision tree classifier with 5-fold cross-validation and hyperparameter optimization. The main diagonal shows the held-out classification accuracy for each cluster and the off-diagonals show the misclassification rates for each cluster to each other cluster. The average accuracy across all clusters was 91%. Figure 3—figure supplement 1: A stability analysis of WaveMAP clustering showing solutions are stable with respect to random seed, random data subset, and in an ensembled version of Louvain. Figure 3—figure supplement 2: Different amplitude normalizations have similar effect but this processing is essential to WaveMAP extracting meaningful structure. Figure 3—figure supplement 3: Pre-processing waveform data with principal component analysis does not alter WaveMAP results.

The number of clusters identified by WaveMAP is dependent on the resolution parameter for Louvain clustering. A principled way to choose this resolution parameter is to use the modularity score (a measure of how tightly interconnected the members of a cluster are) as the objective function to maximize. We chose a resolution parameter of 1.5 that maximized modularity score while ensuring that we did not overly fractionate the dataset (n < 20 within a cluster; Figure 3A,B, and columns of Figure 3—figure supplement 1A). Additional details are available in the ‘Parameter Choice’ section of the Supplementary Information.
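To make the modularity score concrete, the sketch below computes standard (resolution-free) modularity for a partition of a small unweighted graph. This is an illustration only: the WaveMAP graph is weighted, how the resolution parameter rescales this score differs between Louvain implementations and is not shown, and the function name and toy graph are hypothetical.

```python
def modularity(edges, membership):
    """Modularity of a partition of an undirected, unweighted graph.

    Q = sum over communities c of  L_c / m - (d_c / (2 m))^2,
    where m is the number of edges, L_c the number of edges inside c,
    and d_c the summed degree of the nodes in c.
    """
    m = len(edges)
    if m == 0:
        raise ValueError("graph must contain at least one edge")
    internal, degree = {}, {}
    for u, v in edges:
        cu, cv = membership[u], membership[v]
        degree[cu] = degree.get(cu, 0) + 1
        degree[cv] = degree.get(cv, 0) + 1
        if cu == cv:
            internal[cu] = internal.get(cu, 0) + 1
    return sum(internal.get(c, 0) / m - (d / (2 * m)) ** 2
               for c, d in degree.items())


# Toy graph: two triangles joined by a single bridging edge.
toy_edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_groups = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
one_group = {node: "a" for node in range(6)}
print(modularity(toy_edges, two_groups), modularity(toy_edges, one_group))
```

Splitting the toy graph into its two triangles scores higher than lumping all nodes together, which is the sense in which modularity rewards tightly interconnected clusters.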

Louvain clustering with this resolution parameter of 1.5 identified eight clusters in total (Figure 3A). Note that a slightly higher resolution parameter (2.0), a suboptimal solution in terms of modularity, led to seven clusters (Figure 3—figure supplement 1A). The advantage of Louvain clustering is that it is hierarchical: choosing a slightly larger resolution parameter will only merge clusters rather than generate entirely new cluster solutions. Here, we found that the higher resolution parameter merged two of the broad-spiking clusters, ⑥ and ⑦, while keeping the rest of the clusters largely intact and, more importantly, did not lead to material changes in the conclusions of analyses of physiology, decision-related dynamics, or laminar distribution described below. Finally, an alternative ensembled version of the Louvain clustering algorithm (ensemble clustering for graphs [ECG]; Poulin and Théberge, 2018), which requires setting no resolution parameter, produced a clustering almost exactly the same as our results (Figure 3—figure supplement 1C).

To validate that WaveMAP finds a ‘real’ representation of the data, we examined if a very different method could learn the same representation. We trained a gradient boosted decision tree classifier (with a softmax multi-class objective) on the exact same waveform data (vectors of 48 time points, 1.6 ms time length) passed to WaveMAP and used a test-train split with k-fold cross-validation applied to the training data. Hyperparameters were tuned with a 5-fold cross-validated grid search on the training data, and the final parameters are shown in Table 1. After training, the classification was evaluated against the held-out test set (which was never seen in model training/tuning) and the accuracy, averaged over clusters, was 91%. Figure 3C shows the associated confusion matrix which contains accuracies for each class along the main diagonal and misclassification rates on the off-diagonals. Such successful classification at high levels of accuracy was only possible because there were ‘generalizable’ clusterings of similar waveform shapes in the high-dimensional space revealed by UMAP.
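The way a confusion matrix yields per-class accuracies and an accuracy averaged over clusters can be sketched as below. This is a generic illustration with hypothetical labels and function names, not the evaluation code used for Figure 3C.

```python
def confusion_matrix_rates(y_true, y_pred, labels):
    """Row-normalized confusion matrix: entry [r][c] is the fraction of
    samples whose true class is labels[r] that were predicted as labels[c]."""
    index = {label: k for k, label in enumerate(labels)}
    counts = [[0] * len(labels) for _ in labels]
    for truth, guess in zip(y_true, y_pred):
        counts[index[truth]][index[guess]] += 1
    rates = []
    for row in counts:
        total = sum(row)
        rates.append([c / total if total else 0.0 for c in row])
    return rates


def mean_per_class_accuracy(rates):
    """Average of the main diagonal, i.e. accuracy averaged over classes."""
    return sum(rates[k][k] for k in range(len(rates))) / len(rates)


# Hypothetical held-out labels for two clusters.
truth = [1, 1, 1, 1, 2, 2]
guess = [1, 1, 1, 2, 2, 2]
rates = confusion_matrix_rates(truth, guess, labels=[1, 2])
print(rates, mean_per_class_accuracy(rates))
```

Averaging the diagonal weights every cluster equally regardless of its size, which matters when cluster sizes are as uneven as they are here.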

Non-default model hyperparameters used.

| Function | Function name | Parameters | Value |
|---|---|---|---|
| UMAP Algorithm (Python) | umap.UMAP | n_neighbors, min_dist, random_state, metric | 20, 0.1, 42, 'euclidean' |
| Louvain Clustering (Python) | cylouvain.best_partition | resolution | 1.5 |
| UMAP Gradient Boosted Decision Tree (Python) | xgboost.XGBClassifier | max_depth, min_child_weight, n_estimators, learning_rate, objective, rand_state | 4, 2.5, 100, 0.3, 'multi:softmax', 42 |
| GMM Gradient Boosted Decision Tree (Python) | xgboost.XGBClassifier | max_depth, min_child_weight, n_estimators, learning_rate, objective, seed | 10, 2.5, 110, 0.05, 'multi:softmax', 42 |
| 8-Class GMM Gradient Boosted Decision Tree (Python) | xgboost.XGBClassifier | max_depth, min_child_weight, n_estimators, learning_rate, objective, seed | 2, 1.5, 100, 0.3, 'multi:softmax', 42 |
| Gaussian Mixture Model (MATLAB) | fitgmdist | k, start, replicates, statset('MaxIter') | 4, 'randsample', 50, 200 |
| DBSCAN (Python) | sklearn.cluster.DBSCAN | eps, min_samples | 3, 15 |

We find that cluster memberships found by WaveMAP are stable with respect to random seed when the resolution parameter and the n_neighbors parameter are fixed. This stability arises because much of the variability in UMAP layout is the result of the projection process (Figure 2B–v.a). Louvain clustering operates before this step, on the high-dimensional graph generated by UMAP, which is far less sensitive to the random seed. Thus, the actual layout of the projected clusters might differ subtly according to random seed, but the cluster memberships largely do not (see Supplementary Information and columns of Figure 3—figure supplement 1A). Across different random seeds and constant resolution, the clusters found by WaveMAP did not change because the graph construction was consistent across random seeds, at least on our dataset (Figure 3—figure supplement 1A). Here, we fix the random seed purely for visual reproducibility in the figure.

We also found that WaveMAP was robust to data subsetting (randomly sampled subsets of the full dataset; see Supplementary Information and Tibshirani and Walther, 2005), unlike other clustering approaches (Figure 3—figure supplement 1B, green; Figure 4—figure supplement 1). We applied WaveMAP to 100 random subsets at each size from 10% to 90% of the full dataset and compared these to a ‘reference’ clustering produced by the procedure on the full dataset. WaveMAP was consistent in both cluster number (Figure 3—figure supplement 1B, red) and cluster membership (which waveforms were frequently ‘co-members’ of the same cluster; Figure 3—figure supplement 1B, green).

Finally, our results were also robust to another standard approach to normalizing spike waveforms: normalization to trough depth. This method exhibited the same stability in cluster number (Figure 3—figure supplement 2C), and also showed no differences in downstream analyses (Figure 3—figure supplement 2D). Without amplitude normalization, interesting structure was lost (Figure 3—figure supplement 2E), likely because UMAP attempts to explain both waveform amplitude and shape (shown as a smooth gradient in the trough to peak height difference; Figure 3—figure supplement 2F). In addition, common recommendations to apply PCA before non-linear dimensionality reduction were not as important for our waveform dataset, which was fairly low-dimensional (the first three PCs explained 94% of the variance). Projecting waveforms into a three-dimensional PC-space before WaveMAP produced a clustering very similar to that obtained without this step (Figure 3—figure supplement 3).

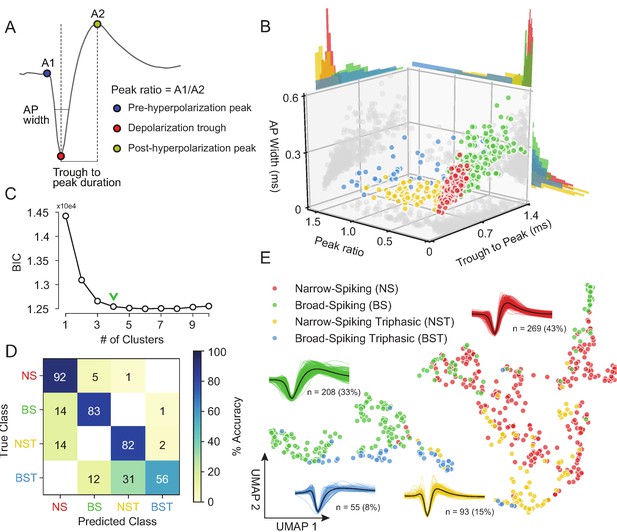

Traditional clustering methods with specified features sub-optimally capture waveform diversity

Our unsupervised approach (Figure 3) generates a stable clustering of waveforms. However, is our method better than the traditional approach of using specified features (Snyder et al., 2016; Trainito et al., 2019; Kaufman et al., 2010; Kaufman et al., 2013; Mitchell et al., 2007; Barthó et al., 2004; Merchant et al., 2008; Merchant et al., 2012; Song and McPeek, 2010; Simons, 1978; Johnston et al., 2009)? To compare how WaveMAP performs relative to traditional clustering methods built on specified features, we applied a Gaussian mixture model (GMM) to the three-dimensional space produced by commonly used waveform features. In accordance with previous work, the features we chose (Figure 4A) were the action potential (AP) width of the spike (width in milliseconds of the full-width half minimum of the depolarization trough; Vigneswaran et al., 2011); the peak ratio, which is the ratio of the pre-hyperpolarization peak (A1) to the post-hyperpolarization peak (A2) (Barry, 2015); and the trough to peak duration (time in ms from the depolarization trough to the post-hyperpolarization peak), which is the most common feature used in analyses of extracellular recordings (Snyder et al., 2016; Kaufman et al., 2013; Merchant et al., 2012).
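The three specified features can be sketched for a single average waveform as follows. This is a simplified illustration, not the MLIB implementation: it measures widths in whole samples without interpolation, takes the half-minimum relative to a baseline of zero, and defaults to a 30 kHz sampling rate inferred from 48 time points spanning 1.6 ms. The function name and the toy waveform are hypothetical.

```python
def specified_features(waveform, sample_rate_hz=30000.0):
    """Return AP width (ms), peak ratio (A1/A2), and trough to peak duration (ms)."""
    dt_ms = 1000.0 / sample_rate_hz
    trough = waveform.index(min(waveform))
    before, after = waveform[:trough], waveform[trough + 1:]
    if not before or not after:
        raise ValueError("trough must not be the first or last sample")

    a1 = max(before)                       # pre-hyperpolarization peak height
    a2 = max(after)                        # post-hyperpolarization peak height
    if a2 == 0:
        raise ValueError("post-hyperpolarization peak height is zero")
    peak_index = trough + 1 + after.index(a2)

    # Full width at half minimum: contiguous samples around the trough
    # that are at or below half of the trough depth.
    half = waveform[trough] / 2.0
    left = right = trough
    while left > 0 and waveform[left - 1] <= half:
        left -= 1
    while right < len(waveform) - 1 and waveform[right + 1] <= half:
        right += 1

    return {
        "ap_width_ms": (right - left) * dt_ms,
        "peak_ratio": a1 / a2,
        "trough_to_peak_ms": (peak_index - trough) * dt_ms,
    }


# Toy waveform sampled at 10 kHz (0.1 ms per sample) for easy arithmetic.
toy = [0.0, 0.2, 0.0, -0.6, -1.0, -0.6, 0.0, 0.4, 0.5, 0.3, 0.0]
print(specified_features(toy, sample_rate_hz=10000.0))
```

Because all three numbers depend on the same few landmarks, they tend to be correlated, which is one reason a feature-based space can under-represent waveform diversity.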

Gaussian mixture model clustering on specified features fails to capture the breadth of waveform diversity.

(A) The three EAP waveform landmarks used to generate the specified features passed to the GMM, shown on a sample waveform: the pre-hyperpolarization peak (A1), the depolarization trough, and the post-hyperpolarization peak (A2). (B) A three-dimensional scatter plot with marginal distributions of waveforms and GMM classes on the three specified features in (A). Narrow-spiking (NS) are in red; broad-spiking (BS) in green; narrow-spiking triphasic (NST) in yellow; and broad-spiking triphasic (BST) types are in blue. Trough to peak was calculated as the time between the depolarization trough and the post-hyperpolarization peak; peak ratio was determined as the ratio between the heights of the pre-hyperpolarization and post-hyperpolarization peaks (A1/A2); and AP width was determined as the width of the depolarization trough using the MLIB toolbox (Stuttgen, 2019). (C) The optimal cluster number in the three-dimensional feature space in (B) was determined to be four clusters using the Bayesian information criterion (BIC) (Trainito et al., 2019). The number of clusters was chosen to be at the ‘elbow’ of the BIC curve (green chevron). (D) A confusion matrix for a gradient boosted decision tree classifier with 5-fold cross-validation with hyperparameter optimization. The main diagonal contains the classification accuracy percentages across the four GMM clusters and the off-diagonal contains the misclassification rates. The average accuracy across classes was 78%. (E) The same scatter plot of normalized EAP waveforms in UMAP space as in Figure 3A but now colored by GMM category. Figure 4—figure supplement 1: We show that WaveMAP clusterings are more consistent across random data subsets than either DBSCAN on t-SNE or a GMM on PCA. Figure 4—figure supplement 2: GMMs fail to fully capture the latent structure in the waveforms.

The result of the GMM applied to these three measures is shown in Figure 4B. This method identified four waveform clusters that roughly separated into broad-spiking (BS, ∼33%, n = 208), narrow-spiking (NS, ∼43%, n = 269), broad-spiking triphasic (BST, ∼9%, n = 55), and narrow-spiking triphasic (NST, ∼15%, n = 93) (Figure 4B). Triphasic waveforms, thought to arise from neurons with myelinated axons or neurites (Barry, 2015; Robbins et al., 2013; Deligkaris et al., 2016; Bakkum et al., 2013; Sun et al., 2021), contain an initial positive spike before the trough and can be identified by large peak ratios (Figure 4A). These GMM clusters are similar to those obtained from other clusterings of EAPs in macaque cortex (Gur et al., 1999; Trainito et al., 2019). We selected four clusters by examining the Bayesian information criterion (BIC) statistic as a function of the number of clusters and identifying the cluster number at the elbow (green chevron in Figure 4C).
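As an illustration of how the three specified features could be computed from a single waveform, the following minimal Python sketch assumes that the depolarization trough is the global minimum, that A1 and A2 are the largest values before and after the trough, and that AP width can be approximated by the width of the trough at half its depth. The function name, the sampling-rate parameter `fs_khz`, and the half-depth definition of width are assumptions made for illustration; the widths reported here were computed with the MLIB toolbox, whose exact definition is not reproduced.

```python
import numpy as np

def waveform_features(waveform, fs_khz=30.0):
    """Return (trough_to_peak_ms, peak_ratio, width_ms) for one waveform.

    Illustrative assumptions: the trough is the global minimum, A1 is the
    largest sample before it, A2 is the largest sample after it, and width
    is measured at half the trough depth.
    """
    w = np.asarray(waveform, dtype=float)
    trough = int(np.argmin(w))
    if trough == 0 or trough == len(w) - 1:
        raise ValueError("trough must lie strictly inside the waveform")
    pre_peak = int(np.argmax(w[:trough]))             # index of A1
    post_peak = trough + int(np.argmax(w[trough:]))   # index of A2
    dt_ms = 1.0 / fs_khz                              # sample spacing in ms

    trough_to_peak_ms = (post_peak - trough) * dt_ms
    peak_ratio = w[pre_peak] / w[post_peak]           # A1 / A2

    # Count contiguous samples around the trough that lie below half depth.
    half = w[trough] / 2.0
    left = trough
    while left > 0 and w[left - 1] < half:
        left -= 1
    right = trough
    while right < len(w) - 1 and w[right + 1] < half:
        right += 1
    width_ms = (right - left + 1) * dt_ms
    return trough_to_peak_ms, peak_ratio, width_ms
```

Stacking these three values per neuron would give the feature matrix on which a Gaussian mixture model is fit.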

To compare the generalizability of this representation with the representation provided by UMAP, we trained the same decision tree classifier on the waveform data (after separate hyperparameter tuning, Table 1) but this time using the four GMM classes as target labels. After training, the accuracy across all four classes averaged ∼78% with no classification accuracy over 95% and misclassifications between every class (Figure 4D). The classifier trained on specified features underperformed the classifier trained on the whole waveform found by WaveMAP. In WaveMAP, the individual classification accuracy of most classes exceeded 95% with few misclassifications between groups even though there were twice as many clusters. This result suggests that the clusters based on specified features are less differentiable than WaveMAP clusters even when a much lower cluster number is considered.
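The per-class accuracies and misclassification rates quoted above are the entries of a row-normalized confusion matrix. A minimal sketch of that bookkeeping is given below; the function name and the integer label encoding are assumptions for illustration, and the classifier that produces the predictions is not shown.

```python
import numpy as np

def per_class_accuracy(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix, its diagonal, and the mean accuracy.

    Rows index the true class and columns the predicted class. Assumes
    integer labels 0..n_classes-1 and that every class occurs in y_true.
    """
    cm = np.zeros((n_classes, n_classes), dtype=float)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    cm_norm = cm / cm.sum(axis=1, keepdims=True)
    class_acc = np.diag(cm_norm)          # main diagonal: per-class accuracy
    return cm_norm, class_acc, float(class_acc.mean())
```

With held-out predictions pooled across cross-validation folds, the third return value corresponds to the average accuracy across classes.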

This deficit can be understood as an inability of the GMM to fully capture the latent structure of the data. If we examine the gray data point shadows (Figure 4B), none of the features contain clear clusters, nor do they follow Gaussian distributions, which is an assumption of the GMM. Examining the marginal distributions in Figure 4B, none of the features induces a clear separability between the clusters, alone or in conjunction. Furthermore, the reproducible clusters found by WaveMAP are linearly inseparable in the feature space of the three GMM features (Figure 4—figure supplement 2A). Note that this is not an artifact of using a lower cluster number in the GMM as opposed to the eight found by WaveMAP. Even if the GMM is instantiated with eight clusters (Figure 4—figure supplement 2B), a classifier is still unable to generalize this clustering with even modest accuracy (average of 56% across clusters; Figure 4—figure supplement 2C) even though the waveform shapes found by the GMM with eight clusters seem somewhat sensible (Figure 4—figure supplement 2D). In fact, across all cluster numbers (n_components from 2 to 16), a classifier tuned for the GMM performed more poorly on the GMM labels than on a WaveMAP projection with the same number of clusters (Figure 4—figure supplement 2E, in red). Tuning WaveMAP parameters that induce different cluster numbers, whether n_neighbors (in dark blue) or resolution (in light blue), had little effect on classifier performance (Figure 4—figure supplement 2E, in blues). WaveMAP yielded mappings that were more generalizable than a GMM on features across every number of clusters and both parameters investigated. Thus, the failure to capture the full diversity of waveforms, especially at high cluster number, is a deficit of applying a GMM to constructed features and not a peculiarity of the model parameters chosen or the number of clusters induced.

We also investigated the representation of specified features in the projected UMAP space. We color coded the waveforms in UMAP, in Figure 5—figure supplement 1, according to each point’s feature values using the same features as in Figure 4 (Figure 5—figure supplement 1A): AP width (Figure 5—figure supplement 1B), trough to peak duration (Figure 5—figure supplement 1C), and peak ratio (Figure 5—figure supplement 1D). We find that WaveMAP implicitly captures each of these specified features, shown as a smooth gradient of values. Our method also exposes the correlation between certain specified features: the gradients of trough to peak duration and AP width point in roughly the same direction, so the two features are highly correlated. This correlation between features exposes their redundancy and is another reason why traditional approaches fail to capture the full diversity of waveform shapes.

To obtain a clearer picture of how WaveMAP captures latent structure missed by specified features, we color the points in UMAP space by their GMM cluster identity in Figure 4E. Here, WaveMAP is able to recapitulate the same structure observed by specified features as a gradient from broad- to narrow-spiking along the UMAP-1 direction. Our technique also captures the transition from triphasic to biphasic along the UMAP-2 direction. WaveMAP is also able to find clusters that occupy an intermediate identity between GMM classes. For instance, WaveMAP cluster ② (Figure 3A) is nearly equal parts broad- and narrow-spiking in the GMM clustering (Figure 4E). If a GMM were used, ② would be split between two classes despite it having a distinct waveform shape characterized by a small pre-hyperpolarization peak, a moderate post-hyperpolarization peak, and relatively constant repolarization slope.

WaveMAP interpretably recapitulates and expands upon known waveform features

We have established that WaveMAP has the ability to discover extracellular waveform clusters, but a common contention with such non-linear methods is that they are uninterpretable. Here, using an interpretable machine learning approach, we show that WaveMAP produces sensible results (Molnar, 2020; Azodi et al., 2020). To identify the features our algorithm is paying attention to, we first computed the inverse mapping of the UMAP transform to probe the projected space in a systematic way. Second, we leveraged the gradient boosted decision tree classifier in Figure 3C and used a decision tree implementation (path-dependent TreeSHAP; Lundberg et al., 2018) of SHapley Additive exPlanations (SHAP values; Lundberg and Lee, 2017; Lundberg et al., 2020) to reveal what waveform features are implicitly used to differentiate clusters.

To quantify the differences between Louvain clusters, we applied a grid of ‘test points’ to the UMAP projected space (Figure 5A, top) and inverted the transform at each location; each of these test points is a coordinate on a grid (black x’s) and shows the waveform associated with every point in the projected space (Figure 5A, bottom). On the bottom of Figure 5A is shown the waveform that corresponds to each point in UMAP space, color-coded to the nearest cluster or to gray if there were no nearby clusters. As UMAP-1 increases, there is a smooth transition in the sign of the inflection of the repolarization slope (the second derivative) from negative to positive (slow to fast repolarization rate). That is, the post-hyperpolarization peak becomes sharper as we increase in the UMAP-1 direction. As UMAP-2 increases, we see a widening of the post-hyperpolarization slope distinct from the change in its inflection (UMAP-1). These two UMAP dimensions recapitulate the known importance of hyperpolarization properties in clustering waveforms. Both hyperpolarization rate (proportional to trough to peak width) and hyperpolarization slope inflection (proportional to repolarization time) are separate but highly informative properties (Trainito et al., 2019; Ardid et al., 2015). Furthermore, since repolarization rate and post-hyperpolarization width associate with different UMAP dimensions, this implies that these two processes are somewhat independent factors shaping the waveform. Repolarization rates are governed by potassium channel dynamics and may play an important role in waveform shape (Soares et al., 2017). Thus, WaveMAP not only finds an interpretable and smoothly varying low-dimensional space but also offers biological insights; in this case, how cell types might differ according to channel protein expression and dynamics.

WaveMAP provides interpretable representations that both validate and extend known and unknown feature importances.

(A) WaveMAP applied to the EAPs as in Figure 3A but overlaid with a grid of test points (black x’s, top) spanning the embedded space. At bottom, the inverse UMAP transform is used to show the predicted waveform at each test point. For each x above, the predicted waveform is plotted and assigned the color of the nearest cluster, or gray if no cluster is nearby. Note that there exists instability in the waveform shape (see waveforms at corners) as test points leave the learned embedded space. (B) The mean absolute SHAP values for 10 time points along all waveforms subdivided according to the SHAP values contributed by each WaveMAP cluster. These SHAP values were informed by applying path-dependent TreeSHAP to a gradient boosted decision tree classifier trained on the waveforms with the WaveMAP clusters as labels. In the inset, all waveforms are shown and in gold are shown the time points for which the SHAP values are shown on the left. Each vertical line is such that the most opaque line contains the greatest SHAP value across WaveMAP clusters; the least opaque, the smallest SHAP value. (C) Each averaged WaveMAP waveform cluster is shown with the three time points containing the greatest SHAP values for each cluster individually. As before, the SHAP value at each time point is proportional to the opacity of the gray vertical line also shown as a bar graph at left. Figure 5—figure supplement 1: WaveMAP implicitly captures waveform features (such as trough to peak or AP width) without the need for prior specification.

In Figure 5B, we made use of SHAP values to identify which aspects of waveform shape the gradient boosted decision tree classifier utilizes in assigning each waveform to a cluster (Lundberg and Lee, 2017; Lundberg et al., 2020). SHAP values build off of the game theoretic quantity of Shapley values (Shapley, 1988; Štrumbelj and Kononenko, 2014), which posit that each feature (point in time along the waveform) is of variable importance in influencing the classifier to decide whether the data point belongs to a specific class or not. Operationally, SHAP values are calculated by examining the change in classifier performance as each feature is obscured (the waveform’s amplitude at each time point in this case), one-by-one (Lundberg and Lee, 2017). Figure 5B shows the top-10 time points in terms of mean absolute SHAP value (colloquially called ‘SHAP value’) and their location. It is important to note that not every time point is equally informative for distinguishing every cluster individually and thus each bar is subdivided into the mean absolute SHAP value contribution of the eight constituent waveform classes. For instance, the 0.7 ms location is highly informative for cluster ⑤ and the 0.3 ms point is highly informative for cluster ⑦ (Figure 5C).
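To make the underlying game theoretic quantity concrete, the sketch below computes exact Shapley values by brute force for a toy three-feature value function. This is not path-dependent TreeSHAP, which obtains the attributions efficiently for tree ensembles; the function names and the toy value function are hypothetical and serve only to show how each feature's marginal contribution is averaged over all orderings in which features are revealed.

```python
from itertools import permutations

def shapley_values(value_fn, n_features):
    """Exact Shapley values by averaging marginal contributions.

    value_fn maps a frozenset of 'revealed' feature indices to a number
    (for a classifier, e.g. the model output with only those features known).
    Cost grows factorially, so this is only feasible for a handful of features.
    """
    orders = list(permutations(range(n_features)))
    phi = [0.0] * n_features
    for order in orders:
        present = set()
        for i in order:
            before = value_fn(frozenset(present))
            present.add(i)
            after = value_fn(frozenset(present))
            phi[i] += (after - before) / len(orders)
    return phi

def toy_value(subset):
    """Hypothetical model: two additive effects plus one interaction."""
    v = 0.0
    if 0 in subset:
        v += 2.0
    if 1 in subset:
        v += 1.0
    if 0 in subset and 2 in subset:
        v += 3.0   # interaction is shared equally between features 0 and 2
    return v

phi = shapley_values(toy_value, 3)
```

The attributions sum to the difference between the value with all features revealed and the value with none. The mean absolute SHAP values in Figure 5B aggregate per-waveform attributions of this kind across waveforms.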

In the inset is shown all waveforms along with each of the top ten time points (in gold) with higher SHAP value shown with more opacity. The time points with highest SHAP value tend to cluster around two different locations giving us an intuition for which locations are most informative for telling apart the Louvain clusters. For instance, the 0.5 to 0.65 ms region contains high variability amongst waveforms and is important in separating out broad- from narrow-spiking clusters. This region roughly contains the post-hyperpolarization peak which is a feature of known importance and incorporated into nearly every study of EAP waveform shape (see Table 1 in Vigneswaran et al., 2011). Similarly, SHAP values implicate the region around 0.3 ms to 0.4 ms as time points that are also of importance and these correspond to the pre-hyperpolarization peak which is notably able to partition out triphasic waveforms (Barry, 2015). Importance is also placed on the location at 0.6 ms corresponding to the inflection point which is similarly noted as being informative (Trainito et al., 2019; Banaie Boroujeni et al., 2021). These methods also implicate other regions of interest that have not been previously noted in the literature to the best of our knowledge: two other locations are highlighted farther along the waveform at 1.1 and 1.27 ms and are important for differentiating ⑧ and ① from the other waveforms. This result suggests that using only up to 1.0 ms or less of the waveform may obscure diversity.

In Figure 5C, we show the three locations that are most informative for delineating a specific cluster; these appear as gray lines with their opacity proportional to their SHAP importance. These individually informative features often do align with those identified as globally-informative but do so with cluster-specific weights. Put another way, not every time point is equally informative for identifying waveforms individually and these ‘most informative’ parts of each waveform do not always perfectly align with globally informative features. In summary, WaveMAP independently and sensibly arrived at a more nuanced incorporation of the very same features identified in previous work—and several novel ones—using a completely unsupervised framework which obviated the need to specify waveform features.

In the second half of the paper, we investigate whether these clusters have distinct physiological (in terms of firing rate), functional, and laminar distribution properties which could give credence that WaveMAP clusters connect to cell types.

WaveMAP clusters have distinct physiological properties

A defining aspect of cell types is that they vary in their physiology and especially firing rate properties (Mountcastle et al., 1969; McCormick et al., 1985; Connors and Gutnick, 1990; Nowak et al., 2003; Connors et al., 1982; Contreras, 2004). However, these neuronal characterizations via waveform ex vivo are not always conserved when the same waveform types are observed in vivo during behavior (Steriade, 2004; Steriade et al., 1998). To connect our waveform clusters to physiological cell types in vivo, we identified each cluster’s firing rate properties. We performed several analyses using the firing rate (FR) in spikes per second (spikes/s) for each cluster during the decision-making task described in Figure 1.

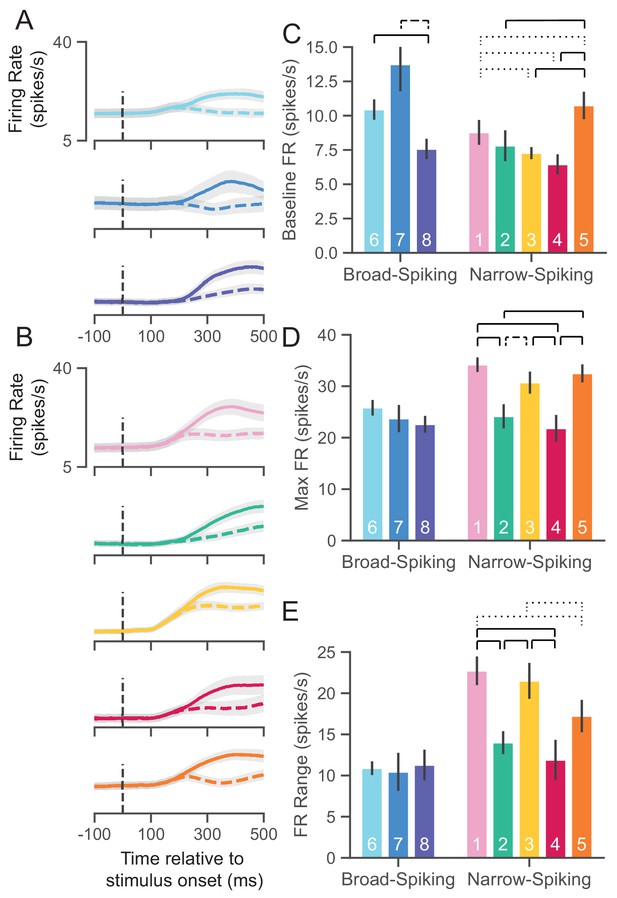

The trial-averaged FRs are aligned to stimulus onset (stim-aligned) and separated into preferred (PREF, solid trace) or non-preferred (NONPREF, dashed trace) reach direction trials. This is shown for both broad- (Figure 6A) and narrow-spiking (Figure 6B) clusters. A neuron’s preferred direction (right or left) was determined as the reach direction in which it had a higher FR on average in the 100 ms time period before movement onset.
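The preferred-direction rule described above can be sketched as follows. The function and argument names are hypothetical, the traces are assumed to be trial-averaged and aligned to movement onset at t = 0 ms, and ties are arbitrarily assigned to the right.

```python
import numpy as np

def preferred_direction(fr_left, fr_right, t_ms, window_ms=100.0):
    """Return 'left' or 'right' for one neuron.

    fr_left and fr_right are trial-averaged firing rates (spikes/s) sampled
    at times t_ms relative to movement onset. The preferred direction is the
    one with the higher mean FR in the window_ms before movement onset.
    """
    t_ms = np.asarray(t_ms, dtype=float)
    mask = (t_ms >= -window_ms) & (t_ms < 0.0)
    left_mean = np.asarray(fr_left, dtype=float)[mask].mean()
    right_mean = np.asarray(fr_right, dtype=float)[mask].mean()
    return "left" if left_mean > right_mean else "right"
```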

UMAP clusters exhibit distinct physiological properties.

(A) Stimulus-aligned trial-averaged firing rate (FR; spikes/s) activity in PMd for broad-spiking WaveMAP clusters. The traces shown are separated into trials for PREF direction reaches (solid traces) and NONPREF direction reaches (dashed traces) and across the corresponding WaveMAP clusters. Shaded regions correspond to bootstrapped standard error of the mean. Dashed vertical line is stimulus-onset time. (B) The same plots as in (A) but for narrow-spiking WaveMAP clusters. (C) Baseline median FR ± S.E.M. for the neurons in the eight different classes. Baselines were taken as the average FR from 200 ms of recording before checkerboard stimulus onset. (D) Median maximum FR ± S.E.M. for the neurons in the eight different clusters. This was calculated by taking the median of the maximum FR for each neuron across the entire trial. (E) Median FR range ± S.E.M. calculated as the median difference, per neuron, between its baseline and max FR. dotted line p < 0.05; dashed line p < 0.01; solid line p < 0.005; Mann-Whitney U test, FDR adjusted. Figure 6—figure supplement 1: GMM clusters are less physiologically distinguishable than WaveMAP clusters.

To further quantify the FR differences between clusters, we calculated three properties of the FR response to stimulus: baseline FR, max FR, and FR range.
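A minimal sketch of these three summary statistics for one cluster is given below. It simplifies the analysis by using a single stimulus-aligned trace per neuron, whereas max FR in this study was taken in a window aligned to movement onset. The function name and array layout are assumptions for illustration.

```python
import numpy as np

def fr_properties(fr, t_ms, baseline_window=(-200.0, 0.0)):
    """Median baseline FR, max FR, and FR range across a cluster's neurons.

    fr has shape (n_neurons, n_timepoints) and holds trial-averaged firing
    rates; t_ms gives time relative to checkerboard onset. Baseline is each
    neuron's mean FR in baseline_window, max FR is its maximum over the
    trace, and FR range is the per-neuron difference between the two.
    """
    fr = np.asarray(fr, dtype=float)
    t_ms = np.asarray(t_ms, dtype=float)
    mask = (t_ms >= baseline_window[0]) & (t_ms < baseline_window[1])
    baseline = fr[:, mask].mean(axis=1)
    max_fr = fr.max(axis=1)
    fr_range = max_fr - baseline
    return float(np.median(baseline)), float(np.median(max_fr)), float(np.median(fr_range))
```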

Baseline FR

Cell types are thought to demonstrate different baseline FRs. We estimated baseline FR (Figure 6C) as the median FR across the 200 ms time period before the appearance of the red-green checkerboard and during the hold period after targets appeared for the broad- (Figure 6A) and narrow-spiking clusters (Figure 6B). The broad-spiking clusters showed significant differences in baseline FR when compared against the narrow-spiking clusters (p = 0.0028, Mann-Whitney U test). Similar patterns were observed in another study of narrow- vs. broad-spiking neurons in PMd during an instructed delay task (Kaufman et al., 2010). We also found that not all broad-spiking neurons had low baseline FR and not all narrow-spiking neurons had high baseline FR. The broad-spiking clusters ⑥ and ⑦ were not significantly different from each other but both differed significantly from ⑧ in that their baseline FR was much higher (10.3 ± 0.7 and 13.2 ± 1.9 spikes/s vs. 7.6 ± 0.75 spikes/s [median ± bootstrap S.E.]; p = 0.0052, p = 0.0029 respectively, Mann-Whitney U test, FDR adjusted). The narrow-spiking clusters (Figure 6B, right) ②, ③, and ④ had relatively low median baseline FRs (7.5 ± 1.1, 7.4 ± 0.4, 6.5 ± 0.7 spikes/s, median ± bootstrap S.E.) and were not significantly different from one another but all were significantly different from ① and ⑤ (p = 0.04, p = 2.8e-4, p = 2.8e-7, p = 4.9e-5, respectively, Mann-Whitney U test, FDR adjusted; see Figure 6C).
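The pairwise comparisons above are reported as FDR adjusted without naming the procedure. Assuming the commonly used Benjamini-Hochberg procedure, the adjustment of a set of raw p-values can be sketched as follows; the function name is hypothetical and the raw p-values would come from the pairwise Mann-Whitney U tests.

```python
import numpy as np

def benjamini_hochberg(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    n = p.size
    order = np.argsort(p)                        # ascending raw p-values
    scaled = p[order] * n / np.arange(1, n + 1)  # p_(k) * n / k
    # Make adjusted values non-decreasing in rank, working from the largest down.
    scaled = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(n)
    adjusted[order] = np.clip(scaled, 0.0, 1.0)
    return adjusted
```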

Maximum FR

A second important property of cell types is their maximum FR (Mountcastle et al., 1969; McCormick et al., 1985; Connors and Gutnick, 1990). We estimated the maximum FR for a cluster as the median of the maximum FR of neurons in the cluster in a 1200 ms period aligned to movement onset (800 ms before and 400 ms after movement onset; Figure 6D). In addition to significant differences in baseline FR, broad- vs. narrow-spiking clusters showed a significant difference in max FR (p = 1.60e-5, Mann-Whitney U test). Broad-spiking clusters were fairly homogeneous with low median max FR (24.3 ± 1.0, median ± bootstrap S.E.) and no significant differences between distributions. In contrast, there was significant heterogeneity in the FRs of narrow-spiking neurons: three clusters (①, ③, and ⑤) had uniformly higher max FR (33.1 ± 1.1, median ± bootstrap S.E.) while two others (② and ④) were uniformly lower in max FR (23.0 ± 1.4, median ± bootstrap S.E.) and were comparable to the broad-spiking clusters. Nearly every higher max FR narrow-spiking cluster was significantly different from each of the lower max FR clusters (all pairwise relationships p < 0.001 except ③ to ④ which was p = 0.007, Mann-Whitney U test, FDR adjusted).

FR range

Many neurons, especially inhibitory types, display a sharp increase in FR and also span a wide range during behavior (Kaufman et al., 2010; Kaufman et al., 2013; Chandrasekaran et al., 2017; Johnston et al., 2009; Hussar and Pasternak, 2009). To examine this change over the course of a trial, we took the median difference across trials between the max FR and baseline FR per neuron to calculate the FR range. We again found the group difference between broad- and narrow-spiking clusters to be significant (p = 0.0002, Mann-Whitney U test). Each broad-spiking cluster (⑥, ⑦, and ⑧) had a median increase of around 10.8 spikes/s (10.8 ± 0.8, 10.7 ± 2.3, and 10.9 ± 1.9 spikes/s respectively, median ± bootstrap S.E.) and each was nearly identical in FR range, differing by less than 0.2 spikes/s. In contrast, the narrow-spiking clusters showed more variation in their FR range, similar to the pattern observed for max FR. ①, ③, and ⑤ had a large FR range (20.3 ± 1.1 spikes/s, median ± bootstrap S.E.) and the clusters ② and ④ had a relatively smaller FR range (13.4 ± 1.3 spikes/s, median ± bootstrap S.E.). These results demonstrate that some narrow-spiking clusters, in addition to having high baseline FR, highly modulated their FR over the course of a behavioral trial.

Such physiological heterogeneity in narrow-spiking cells has been noted before (Ardid et al., 2015; Banaie Boroujeni et al., 2021; Quirk et al., 2009) and in some cases, attributed to different subclasses of a single inhibitory cell type (Povysheva et al., 2013; Zaitsev et al., 2009). Other work also strongly suggests that narrow-spiking cells contain excitatory neurons with distinct FR properties contributing to this diversity (Vigneswaran et al., 2011; Onorato et al., 2020).

Furthermore, if WaveMAP has truly arrived at a closer delineation of underlying cell types compared to previous methods, it should produce a ‘better’ clustering of physiological properties beyond just a better clustering of waveform shape. To address this issue, we calculated the same firing rate traces and physiological properties as in Figure 6 but with the GMM clusters (Figure 6—figure supplement 1). While the FR traces maintain the same trends (BS does not increase its FR prior to the split into PREF and NONPREF while NS does; compare to WaveMAP broad-spiking vs. narrow-spiking clusters respectively), many of the significant differences between clusters are lost across all physiological measures even though fewer groups are compared (Figure 6—figure supplement 1B, C, and D). We also quantitatively estimated these differences by calculating the effect sizes (Cohen’s f²) across the WaveMAP and GMM clusterings with a one-way ANOVA. The effect size was larger for WaveMAP than for GMM clustering for every physiological property: baseline FR (0.070 vs. 0.013), maximum FR (0.035 vs. 0.011), and FR range (0.055 vs. 0.034).
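The exact effect size computation is not spelled out here. Under the standard definition for a one-way ANOVA, Cohen's f² equals η²/(1 − η²), which reduces to the ratio of the between-group to the within-group sum of squares. A minimal sketch under that assumption, with a hypothetical function name, is:

```python
import numpy as np

def cohens_f2(groups):
    """Cohen's f^2 for a one-way layout: SS_between / SS_within.

    groups is a sequence of 1-D arrays, one per cluster, holding a
    physiological property (e.g. baseline FR) for each neuron.
    """
    groups = [np.asarray(g, dtype=float) for g in groups]
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    return float(ss_between / ss_within)
```

Applying the same function to the eight WaveMAP clusters and to the four GMM clusters would give directly comparable effect sizes for each property.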

WaveMAP clusters have distinct decision-related dynamics

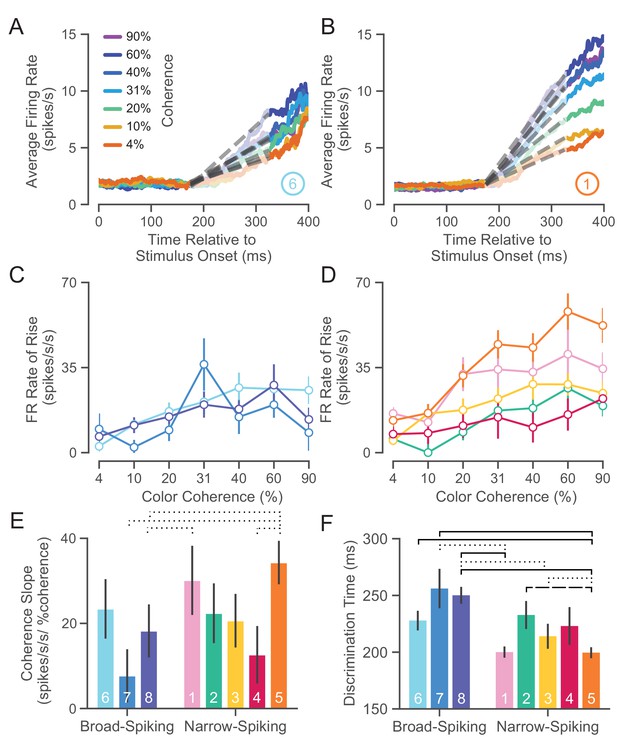

Our analysis in the previous section showed that there is considerable heterogeneity in the physiological properties of WaveMAP clusters. Are these putative cell types also functionally different? Prior literature argues that neuronal cell types have distinct functional roles during cortical computation with precise timing. For instance, studies of macaque premotor (Song and McPeek, 2010), inferotemporal (IT) (Mruczek and Sheinberg, 2012), and frontal eye field (FEF) (Ding and Gold, 2012) areas show differences in decision-related functional properties: between broad- and narrow-spiking neurons, narrow-spiking neurons exhibit choice-selectivity earlier than broad-spiking neurons. In the mouse, specific aspects of behavior are directly linked with inhibitory cell types (Pinto and Dan, 2015; Estebanez et al., 2017). Here, we examine the functional properties of each cluster based on two inferred statistics: choice-related dynamics and discrimination time.

Choice-related dynamics

The first property we assessed for these WaveMAP clusters was the dynamics of the choice-selective signal. The neural prediction made by computational models of decision-making (for neurons that covary with an evolving decision) is that the build-up of average neural activity in favor of a choice is faster for easier compared to harder color coherences (Chandrasekaran et al., 2017; Ding and Gold, 2012; Roitman and Shadlen, 2002). Build-up activity is measured by analyzing the rate of change of choice-selective activity vs. time. We therefore examined the differences in averaged stimulus-aligned choice-selectivity signals (defined as |left - right|) for different checkerboard color coherences for each cluster.

In Figure 7A and B, we show average choice-selectivity signals across the seven color coherence levels (Figure 7A, legend) for an example broad- (⑥) and narrow-spiking cluster (①). For ⑥ (Figure 7A), easier stimuli (higher coherence) only led to modest increases in the rate at which the choice selectivity signal increases. In contrast, ① (Figure 7B) shows faster rates for the choice-selective signal as a function of coherence. We summarized these effects by measuring the rate of change for the choice-selective signal between 175 and 325 ms for stimulus-aligned trials in each coherence condition (dashed lines in Figure 7A,B). This rate of rise for the choice-selective signal (spikes/s/s) vs. coherence is shown for broad- (Figure 7C) and narrow-spiking (Figure 7D) clusters. The broad-spiking clusters demonstrate fairly similar coherence-dependent changes with each cluster being somewhat indistinguishable and only demonstrating a modest increase with respect to coherence. In contrast, the narrow-spiking clusters show a diversity of responses with ① and ⑤ demonstrating a stronger dependence of choice-related dynamics on coherence compared to the other three narrow-spiking clusters which were more similar in response to broad-spiking neurons.

UMAP clusters exhibit distinct functional properties.

(A) Average firing rate (FR) over time for ⑥ (used as a sample broad-spiking cluster) across trials of different color coherences. The gray-dashed lines indicate the linear regression lines used to calculate the FR rate of rise. (B) Average FR over time for ① (used as a sample narrow-spiking cluster) across different color coherences. (C) FR rate of rise vs. color coherence for broad- and (D) narrow-spiking clusters. Error bars correspond to standard error of the mean across trials. (E) Bootstrapped median color coherence slope is shown with the bootstrapped standard error of the median for each cluster on a per-neuron basis. Coherence slope is a linear regression of the cluster-specific lines in the previous plots C and D. (F) Median bootstrapped discrimination time for each cluster with error bars as the bootstrapped standard error of the median. Discrimination time was calculated as the amount of time after checkerboard appearance at which the choice-selective signal could be differentiated from the baseline FR (Chandrasekaran et al., 2017). dotted line p < 0.05; dashed line p < 0.01; solid line p < 0.005; Mann-Whitney U test, FDR adjusted.

We further summarized these plots by measuring the dependence of the rate of rise of the choice-selective signal as a function of coherence measured as the slope of a linear regression performed on the rate of rise vs. color coherence for each cluster (Figure 7E). The coherence slope for broad-spiking clusters was moderate and similar to ②, ③, and ④ while the coherence slope for ① and ⑤ was steeper. Consistent with Figure 7C and D, the choice selective signal for ① and ⑤ showed the strongest dependence on stimulus coherence.
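The two nested regressions described above (rate of rise within a coherence level, then coherence slope across levels) can be sketched with ordinary least squares. The function names are hypothetical, and the sketch assumes a stimulus-aligned choice-selectivity trace sampled at known times in milliseconds.

```python
import numpy as np

def rate_of_rise(choice_selectivity, t_ms, window=(175.0, 325.0)):
    """Slope of the choice-selective signal in the window, in spikes/s/s."""
    t_ms = np.asarray(t_ms, dtype=float)
    y = np.asarray(choice_selectivity, dtype=float)
    mask = (t_ms >= window[0]) & (t_ms <= window[1])
    slope_per_ms = np.polyfit(t_ms[mask], y[mask], 1)[0]
    return float(slope_per_ms * 1000.0)   # convert from per ms to per s

def coherence_slope(coherences, rates_of_rise):
    """Slope of a linear fit of rate of rise against color coherence."""
    return float(np.polyfit(np.asarray(coherences, dtype=float),
                            np.asarray(rates_of_rise, dtype=float), 1)[0])
```

Here `rate_of_rise` would be evaluated once per coherence level, and the resulting values passed to `coherence_slope` together with the coherence levels.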

Discrimination time

The second property that we calculated was the discrimination time for clusters, which is defined as the first time at which the choice-selective signal (again defined as |left - right|) departed from the FR of the hold period. We calculated the discrimination time on a neuron-by-neuron basis by computing the first time point at which the difference in FR for the two choices was significantly different from baseline using a bootstrap test (at least 25 successive time points significantly different from baseline FR, corrected for multiple comparisons; Chandrasekaran et al., 2017). Discrimination time for broad-spiking clusters (255 ± 94 ms, median ± bootstrap S.E.) was significantly later than for narrow-spiking clusters (224 ± 89 ms, median ± bootstrap S.E.; p < 0.005, Mann-Whitney U test). Clusters ① and ⑤, with the highest max FRs (34.0 ± 1.4 and 33.0 ± 1.8 spikes/s, median ± S.E.) and most strongly modulated by coherence, had the fastest discrimination times as well (200.0 ± 4.9 and 198.5 ± 4.9 ms, median ± S.E.).
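Given a per-time-point significance mask for one neuron, the run-length criterion above can be sketched as follows. The bootstrap test that produces the mask and the multiple-comparisons correction are not shown, and the function name is hypothetical.

```python
def discrimination_time(significant, t_ms, min_run=25):
    """First time starting a run of at least min_run significant time points.

    significant is a sequence of booleans (one per time point) marking where
    the choice-selective signal differs from baseline; t_ms gives the time of
    each point relative to checkerboard onset. Returns None if no run of the
    required length exists.
    """
    run = 0
    for i, is_sig in enumerate(significant):
        run = run + 1 if is_sig else 0
        if run == min_run:
            return float(t_ms[i - min_run + 1])
    return None
```

Isolated significant points or short runs are ignored, so brief fluctuations do not register as the onset of choice selectivity.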

Together the analysis of choice-related dynamics and discrimination time showed that there is considerable heterogeneity in the properties of narrow-spiking neuron types. Not all narrow-spiking neurons are faster than broad-spiking neurons and choice-selectivity signals have similar dynamics for many broad-spiking and narrow-spiking neurons. ① and ⑤ have the fastest discrimination times and strongest choice dynamics. In contrast, the broad-spiking neurons have uniformly slower discrimination times and weaker choice-related dynamics.

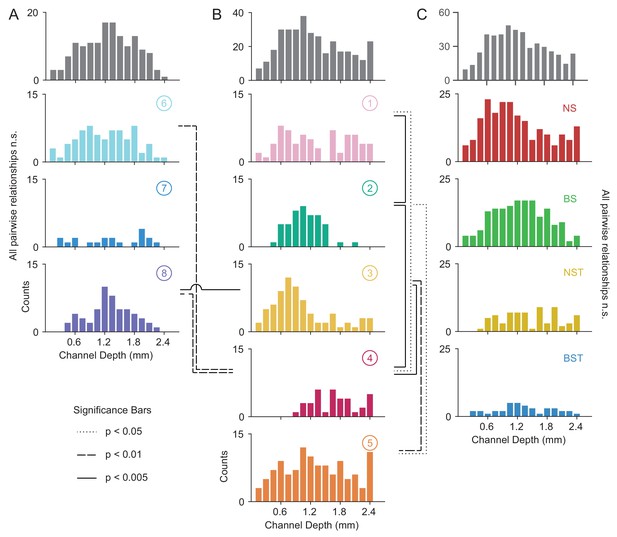

WaveMAP clusters contain distinct laminar distributions

In addition to having certain physiological properties and functional roles, numerous studies have shown that cell types across phylogeny, verified by single-cell transcriptomics, are defined by distinct patterns of laminar distribution in cortex (Hodge et al., 2019; Tosches et al., 2018). Here, we examined the laminar distributions of WaveMAP clusters and compared them to laminar distributions of GMM clusters. The number of waveforms from each cluster was counted at each of sixteen U-probe channels separately. These channels were equidistantly spaced every 0.15 mm between 0.0 and 2.4 mm. This spanned the entirety of PMd which is approximately 2.5 mm in depth from the pial surface to white matter (Arikuni et al., 1988). However, making absolute statements about layers is difficult with these measurements because of errors in aligning superficial electrodes with layer I across different days. This could lead to shifts in estimates of absolute depth; up to 0.15 mm (the distance between the first and second electrode) of variability is induced in the alignment process (see Materials and methods). However, relative comparisons are likely better preserved. Thus, we use relative comparisons to describe laminar differences between distributions and in comparison to anatomical counts in fixed tissue in later sections.

Above each column of Figure 8A and B are the laminar distributions for all waveforms in the associated set of clusters (in gray); below these are the laminar distributions for each cluster set’s constituent clusters. On the right (Figure 8C), we show the distribution of all waveforms collected at top in gray with each GMM cluster’s distribution shown individually below.

Laminar distribution of WaveMAP waveform clusters.

(A, B) The overall histograms for the broad- and narrow-spiking waveform clusters are shown at top across cortical depths on the left and right respectively (in gray); below are shown histograms for their constituent WaveMAP clusters. These waveforms are shown sorted by the cortical depth at which they were recorded (0.0 mm [presumptive pial surface] to 2.4 mm in 0.15 mm increments). Broad-spiking clusters were generally centered around middle layers and were less distinct in their differences in laminar distribution. Narrow-spiking clusters are shown on the right and were varied in their distribution with almost every cluster significantly varying in laminar distribution from every other. (C) Depth histograms for all waveforms collected (top, in gray) and every GMM cluster (below). dotted line p < 0.05; dashed line p < 0.01; solid line p < 0.005; two-sample Kolmogorov-Smirnov Test, FDR adjusted. Figure 8—figure supplement 1: Composite figure showing each WaveMAP cluster with waveform, physiological, functional, and laminar distribution properties.

The overall narrow- and broad-spiking populations did not differ significantly in their laminar distribution (p = 0.24, Kolmogorov-Smirnov test). The broad-spiking cluster set of neurons (⑥, ⑦, and ⑧) is generally thought to contain cortical excitatory pyramidal neurons enriched in middle to deep layers (Nandy et al., 2017; McCormick et al., 1985). Consistent with this view, we found these broad-spiking clusters (Figure 8A) were generally centered around middle to deep layers with broad distributions and were not significantly distinguishable in laminarity (all comparisons p > 0.05, two-sample Kolmogorov-Smirnov test, FDR adjusted).

In contrast, narrow-spiking clusters (Figure 8B) were distinctly varied in their distribution such that almost every cluster had a unique laminar distribution. Cluster ① contained a broad distribution. It was significantly different in laminar distribution from clusters ② and ④ (p = 0.002 and p = 0.013, respectively, two-sample Kolmogorov-Smirnov, FDR adjusted).

Cluster ② showed a strongly localized concentration of neurons at a depth of 1.1 ± 0.33 mm (mean ± S.D.). It was significantly different from almost all other narrow-spiking clusters (p = 0.002, p = 1e-5, p = 0.010 for ①, ④, and ⑤ respectively; two-sample Kolmogorov-Smirnov test, FDR adjusted). Similarly, cluster ③ also showed a strongly localized laminar distribution but was situated more superficially than ② with a heavier tail (1.0 ± 0.6 mm, mean ± S.D.).

Cluster ④ was uniquely deep in its cortical distribution (1.70 ± 0.44 mm, mean ± S.D.). These neurons had a strongly triphasic waveform shape characterized by a large pre-hyperpolarization peak. These waveforms have been implicated as arising from myelinated excitatory pyramidal cells (Barry, 2015), which are especially dense in this caudal region of PMd (Barbas and Pandya, 1987).

The last cluster, ⑤, was, like ①, characterized by a broad distribution across cortical depths, unique among narrow-spiking neurons. It was centered around a depth of 1.3 ± 0.65 mm (mean ± S.D.) and present in all layers (Arikuni et al., 1988).

Such laminar differences were not observed when we used GMM clustering. Laminar distributions for BS, BST, NS, and NST did not significantly differ from each other (Figure 8C; BS vs. BST had p = 0.067, all other relationships p > 0.2; two-sample Kolmogorov-Smirnov test, FDR adjusted). Each GMM cluster also exhibited broad distributions across cortex which is at odds with our understanding of cell types using histology (discussed in the next section).
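The pairwise laminar comparisons described above can be sketched in a few lines of Python. This is a minimal illustration and not the analysis code used in the study: the depth values and cluster names are made up, the Benjamini-Hochberg procedure is assumed as the FDR adjustment (this section does not name the procedure used), and the p-value comes from the asymptotic Kolmogorov series with a small-sample correction, which is only approximate when depths take a few discrete values. In practice, a library routine such as `scipy.stats.ks_2samp` would be the usual choice.

```python
import math
from itertools import combinations


def ks_statistic(a, b):
    """Largest absolute gap between the two empirical CDFs (ties handled)."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def ks_pvalue(d, n, m, terms=100):
    """Approximate two-sided p-value from the asymptotic Kolmogorov series."""
    root = math.sqrt(n * m / (n + m))
    lam = (root + 0.12 + 0.11 / root) * d
    if lam < 0.2:  # the series converges slowly here; the value is ~1
        return 1.0
    series = sum((-1) ** (k - 1) * math.exp(-2.0 * (k * lam) ** 2)
                 for k in range(1, terms + 1))
    return min(1.0, max(0.0, 2.0 * series))


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        idx = order[rank - 1]
        running_min = min(running_min, pvals[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical recording depths (mm) for three illustrative clusters.
depths = {
    "cluster_a": [0.15, 0.3, 0.3, 0.45, 0.6, 0.6, 0.75, 0.9],
    "cluster_b": [1.2, 1.35, 1.5, 1.5, 1.65, 1.8, 1.95, 2.1],
    "cluster_c": [0.3, 0.45, 0.6, 0.6, 0.75, 0.9, 1.05, 1.2],
}
pairs = list(combinations(depths, 2))
raw = [ks_pvalue(ks_statistic(depths[p], depths[q]), len(depths[p]), len(depths[q]))
       for p, q in pairs]
adjusted = bh_adjust(raw)
for (p, q), p_adj in zip(pairs, adjusted):
    print(f"{p} vs {q}: adjusted p = {p_adj:.4f}")
```

Adjusting across all pairwise comparisons at once, rather than testing each pair in isolation, is what keeps the expected proportion of false discoveries controlled when many cluster pairs are compared.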

Some narrow-spiking WaveMAP cluster laminar distributions align with inhibitory subtypes

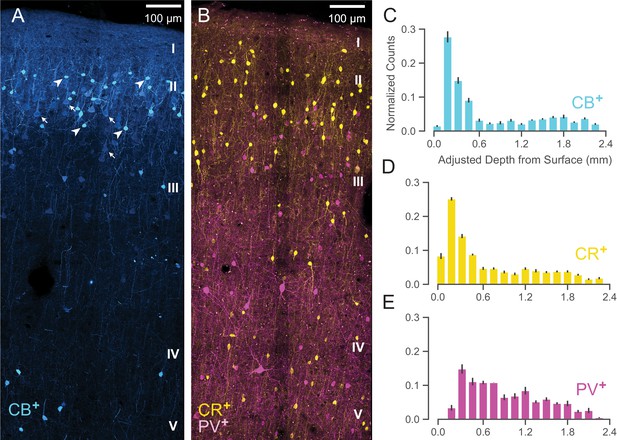

We have shown that WaveMAP clusters have more distinct laminarity than GMM clusters. If WaveMAP clusters are consistent with cell type, we should expect their distributions to be relatively consistent with distributions from certain anatomical types visualized via immunohistochemistry (IHC). An especially well-studied set of non-overlapping anatomical inhibitory neuron types in the monkey are parvalbumin-, calretinin-, and calbindin-positive GABAergic interneurons (PV+, CR+, and CB+ respectively) (DeFelipe, 1997). Using IHC, we examined tissue from macaque rostral PMd stained for each of these three interneuron types. We then conducted stereological counting of each type averaged across six exemplars to quantify cell type distribution across cortical layers (see Figure 9A and B, Schmitz et al., 2014) and compared it to the distributions in Figure 8.

Anatomical labeling of three inhibitory interneuron types in PMd.

(A) Sample maximum intensity projection of immunohistological (IHC) staining of rostral PMd calbindin-positive (CB+) interneurons in blue. Note the many weakly-positive excitatory pyramidal neurons (arrows) in contrast to the strongly-positive interneurons (arrowheads). Only the interneurons were considered in stereological counting. In addition, only around the first 1.5 mm of tissue is shown (top of layer V) but the full tissue area was counted down to 2.4 mm (approximately the top of white matter). Layer IV exists as a thin layer in this area. Layer divisions were estimated based on depth and with reference to Arikuni et al., 1988. (B) Sample maximum intensity projection of IHC staining of PMd calretinin-positive (CR+) and parvalbumin-positive (PV+) interneurons in yellow and fuchsia, respectively. The same depth of tissue and layer delineations were used as in (A). (C, D, E) Stereological manual counts (Schmitz et al., 2014) (mean ± S.D.) of CB+, CR+, PV+ cells in PMd, respectively. Counts were collected from six specimens, each with all three IHC stains, and with counts normalized to each sample. Source files for this figure are available on Dryad (https://doi.org/10.5061/dryad.z612jm6cf).

Both CB+ and CR+ cells (Figure 9C and D, respectively) exhibited a similarly restricted superficial distribution most closely resembling ③. In addition, CR+ and CB+ cells are known to have very similar physiological properties and spike shape (Zaitsev et al., 2005). An alternative possibility is that one of CR+ or CB+ might correspond to ② and the other to ③ but this is less likely given their nearly identical histological distributions (Figure 9C and D) and similar physiology (Zaitsev et al., 2005).

In contrast, WaveMAP cluster ① had laminar properties consistent with PV+ neurons (Figure 9B): both were concentrated superficially but proliferated into middle layers (Figure 9E). In addition, there were striking physiological and functional similarities between ① and PV+ cells. In particular, both ① and PV+ cells have low baseline FR, early responses to stimuli, and robust modulation of FR, similar to PV+ cells in mouse M1 (Estebanez et al., 2017). Cluster ⑤ also had similar properties to ① and could also correspond to PV+ cells.

Together, these results from IHC suggest that the narrow-spiking clusters identified from WaveMAP potentially map on to different inhibitory types.

Heterogeneity in decision-related activity emerges from both cell type and layer

Our final analysis examines whether these WaveMAP clusters can explain some of the heterogeneity observed in decision-making responses in PMd over and above previous methods (Chandrasekaran et al., 2017). Heterogeneity in decision-related activity can emerge from cortical depth, different cell types within each layer, or both. To quantify the relative contributions of WaveMAP clusters and cortical depth, we regressed discrimination time on both separately and together and examined the change in variance explained (adjusted R²). We then compared this against the GMM clusters with cortical depth to show that WaveMAP better explains the heterogeneity of decision-related responses.

We previously showed that some of the variability in decision-related responses is explained by the layer from which the neurons are recorded (Chandrasekaran et al., 2017). Consistent with previous work, we found that cortical depth explains some variability in discrimination time (1.7%). We next examined whether the WaveMAP clusters also explained variability in discrimination time: a categorical regression between WaveMAP clusters and discrimination time explained a much larger 6.6% of variance. Including both cortical depth and cluster identity in the regression explained 7.3% of variance in discrimination time.

In contrast, we found that GMM clusters regressed against discrimination time only explained 3.3% of variance and the inclusion of both GMM cluster and cortical depth only explained 4.6% of variance.

Thus, we find that WaveMAP clustering explains a much larger share of variance than cortical depth alone. This demonstrates that WaveMAP clusters come closer to cell types than previous efforts and are not artifacts of layer-dependent decision-related inputs. That is, both the cortical layer in which a cell type is found and WaveMAP cluster membership contribute to the variability in decision-related responses. Furthermore, WaveMAP clusters outperform GMM clusters as regressors of a functional property associated with cell types. These results further highlight the power of WaveMAP to separate out putative cell types and help us better understand decision-making circuits.
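As a concrete illustration of the variance-explained measure used in this comparison, the sketch below computes R² and adjusted R² for a regression on a single categorical predictor, where the least-squares fit reduces to the mean of each category. The cluster labels and discrimination times are invented for illustration and the function name is hypothetical; the regression that also includes cortical depth would need a general least-squares solver and is not shown.

```python
from collections import defaultdict


def categorical_r2(labels, y):
    """R^2 and adjusted R^2 for y regressed on one categorical predictor.

    With an intercept and dummy-coded categories, the fitted value for each
    observation is simply the mean of its category.
    """
    n = len(y)
    grand_mean = sum(y) / n
    groups = defaultdict(list)
    for label, value in zip(labels, y):
        groups[label].append(value)

    ss_total = sum((v - grand_mean) ** 2 for v in y)
    ss_resid = sum((v - sum(vals) / len(vals)) ** 2
                   for vals in groups.values() for v in vals)
    r2 = 1.0 - ss_resid / ss_total

    p = len(groups) - 1  # number of dummy predictors, excluding the intercept
    adjusted = 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)
    return r2, adjusted


# Made-up cluster assignments and discrimination times (ms).
clusters = ["c1", "c1", "c1", "c2", "c2", "c2", "c3", "c3", "c3"]
disc_time = [210.0, 250.0, 230.0, 300.0, 280.0, 320.0, 240.0, 260.0, 290.0]
r2, adj_r2 = categorical_r2(clusters, disc_time)
print(f"R^2 = {r2:.3f}, adjusted R^2 = {adj_r2:.3f}")
```

The adjustment penalizes each additional predictor, which matters here because a categorical regressor with eight clusters uses more degrees of freedom than a single depth term or a four-cluster GMM labeling.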

Discussion

Our goal in this study was to further understand the relationship between waveform shape and the physiology, function, and laminar distribution of cell populations in dorsal premotor cortex during perceptual decision-making. Our approach was to develop a new method, WaveMAP, that combines a recently developed non-linear dimensionality reduction technique (UMAP) with graph clustering (Louvain community detection) to uncover hidden diversity in extracellular waveforms. We found this approach not only replicated previous studies by distinguishing between narrow- and broad-spiking neurons, but did so in a way that (1) revealed additional diversity, and (2) obviated the need to examine particular waveform features. In this way, our results demonstrate how traditional feature-based methods obscure biological detail that is more faithfully revealed by our WaveMAP method. Furthermore, through interpretable machine learning, we show our approach not only leverages many of the features already established as important in the literature but expands upon them in a more nuanced manner, all with minimal supervision or stipulation of priors. Finally, we show that the candidate cell classes identified by WaveMAP have distinct physiological properties, decision-related dynamics, and laminar distribution. The properties of each WaveMAP cluster are summarized in Figure 8—figure supplement 1A and B for broad- and narrow-spiking clusters, respectively.

WaveMAP combines UMAP with high-dimensional graph clustering and interpretable machine learning to better identify candidate cell classes. Our approach might also be useful in other domains that employ non-linear dimensionality reduction such as computational ethology (Ali et al., 2019; Hsu and Yttri, 2020; Bala et al., 2020), analysis of multi-scale population structure (Diaz-Papkovich et al., 2019), and metascientific analyses of the literature (Noichl, 2021). We also note that while non-linear dimensionality reduction and UMAP have traditionally been applied to data lacking autoregressive properties, such as transcriptomic expression (Becht et al., 2019), this does not seem to be an issue for WaveMAP. Even though our waveforms have temporal autocorrelation, our method is still able to pick out interesting structure. Other work has found similar success in analyzing time series data with non-linear dimensionality reduction (Sedaghat-Nejad et al., 2021; Dimitriadis et al., 2018; Jia et al., 2019; Gouwens et al., 2020; Ali et al., 2019).

Advantages of WaveMAP over traditional methods

At the core of WaveMAP is UMAP which has some advantages over other non-linear dimensionality reduction methods that have been applied in this context. Although most algorithms offer fast implementations that scale well to large input dimensionalities and volumes of data (Linderman et al., 2019; Nolet et al., 2020), UMAP also projects efficiently into arbitrary output dimensionalities while also returning an invertible transform. That is, we can efficiently project new data into any arbitrary dimensional projected space without having to recompute the mapping.

These properties provide three advantages over other non-linear dimensionality reduction approaches: First, our method is stable in the sense that it produces a consistent number of clusters and each cluster has the same members across random subsamples (Figure 3—figure supplement 1B). Clustering in the high-dimensional space rather than the projected space lends stability to our approach. Second, it allows exploration of any region of the projected space no matter the intuited latent dimensionality—this yields an intuitive understanding of how UMAP non-linearly transforms the data, which might be related to underlying biological phenomena. Thus, UMAP allows WaveMAP to go beyond a ‘discriminative model’ typical of other clustering techniques and function as a ‘generative model’ with which to make predictions. Third, it enables cross-validation of a classifier trained on cluster labels, impossible with methods that don’t return an invertible transform. To cross-validate unsupervised methods, unprocessed test data must be passed into a transform computed only on training data and evaluated with some loss function (Moscovich and Rosset, 2019). This is only possible if an invertible transform is admitted by the method of dimensionality reduction as in UMAP.
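The cross-validation requirement described above (fit the transform on training data only, then pass held-out data through that same fitted transform) can be sketched as follows. To keep the example self-contained, per-feature standardization stands in for the embedding and a nearest-centroid rule stands in for the classifier; neither is the method used in this study, the data are synthetic, and all function names are hypothetical. With umap-learn, the corresponding calls would be `UMAP.fit` on the training fold and `UMAP.transform` on the held-out fold.

```python
import random
import statistics


def fit_transform_params(rows):
    """Stand-in for fitting an embedding: per-feature mean and SD."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    sds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard against SD of 0
    return means, sds


def apply_transform(params, rows):
    """Apply an already-fitted transform to new rows without refitting."""
    means, sds = params
    return [[(x - m) / s for x, m, s in zip(row, means, sds)] for row in rows]


def fit_centroids(rows, labels):
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(label, []).append(row)
    return {label: [statistics.fmean(c) for c in zip(*members)]
            for label, members in groups.items()}


def predict(centroids, row):
    return min(centroids, key=lambda label: sum(
        (x - c) ** 2 for x, c in zip(row, centroids[label])))


def cross_validate(rows, labels, k=5, seed=0):
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    scores = []
    for test_idx in folds:
        held_out = set(test_idx)
        train_idx = [i for i in idx if i not in held_out]
        # Fit on the training fold only, so no test information leaks in.
        params = fit_transform_params([rows[i] for i in train_idx])
        train_z = apply_transform(params, [rows[i] for i in train_idx])
        # Held-out rows go through the transform fitted above.
        test_z = apply_transform(params, [rows[i] for i in test_idx])
        centroids = fit_centroids(train_z, [labels[i] for i in train_idx])
        hits = sum(predict(centroids, z) == labels[i]
                   for z, i in zip(test_z, test_idx))
        scores.append(hits / len(test_idx))
    return scores


# Synthetic two-feature "waveforms" from two well-separated groups.
rng = random.Random(1)
rows = ([[rng.gauss(0, 0.1), rng.gauss(0, 0.1)] for _ in range(20)]
        + [[rng.gauss(5, 0.1), rng.gauss(5, 0.1)] for _ in range(20)])
labels = ["narrow"] * 20 + ["broad"] * 20
scores = cross_validate(rows, labels)
print("per-fold accuracy:", scores)
```

A method that cannot map unseen points into an existing embedding would have to refit on the combined training and test data, which is exactly the leakage this protocol avoids.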

A final advantage of UMAP is that it inherently allows for not just unsupervised but also supervised and semi-supervised learning, whereas some other methods do not (Sainburg et al., 2020). This key difference enables ‘transductive inference’, that is, making predictions on unlabeled test points based upon information gleaned from labeled training points. This opens up a diverse range of novel applications in neuroscience through informing the manifold learning process with biological ground truths (in what is called ‘metric learning’) (Bellet et al., 2013; Yang and Jin, 2006). Experimentalists could theoretically pass biological ground truths to WaveMAP as training labels and ‘teach’ WaveMAP to produce a manifold that more closely hews to true underlying diversity. For instance, if experimentalists ‘opto-tag’ neurons of a particular cell type (Roux et al., 2014; Deubner et al., 2019; Jia et al., 2019; Cohen et al., 2012; Hangya et al., 2015), this information can be passed along with the extracellular waveform to WaveMAP which would, in a semi-supervised manner, learn manifolds better aligned to biological truth.

A learned manifold could also be useful in future experiments to identify cell types in real-time without opto-tagging. This could be done by projecting the averaged waveforms found within an experiment into the learned WaveMAP manifold. This method would be especially useful in a scenario in which the number of electrodes exceeds the number of channels available to record from simultaneously and not all cell types are of equal interest to record (e.g. Neuropixels probes which have 960 electrodes but simultaneously record from only 384; Trautmann et al., 2019; Jun et al., 2017). We believe this is a rich area that can be explored in future work.